Did you ever wonder if you can use your NiFi instance to control NiFi itself? Well, you can! In this post I'm using an out-of-the-box Apache NiFi 2.3.0 instance. I configure an SSLContextService controller service that points at the keystore and truststore NiFi uses for secure communications, and an InvokeHTTP processor that calls NiFi's own API.

To get started, I created a GenerateFlowFile processor on the canvas. In my example you can specify the API URL you want to call and the HTTP method (GET, POST, etc.). I'm doing a GET for now, so I didn't set the FlowFile content, but in the next post I will illustrate how you can set the content for a PUT/POST call. The response from InvokeHTTP goes into a funnel so I can view the output in the Content Viewer UI.

I'm using "http.method" and "api.endpoint" as my attributes in GenerateFlowFile.
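To make the role of the two attributes concrete, here is a minimal Python sketch of the request they describe. The attribute names are the ones from my flow; the values are illustrative assumptions (the default HTTPS port 8443 and a placeholder process group ID), so substitute your own. The request is only built, not sent.

```python
from urllib.request import Request

# Attribute names come from the flow; the values are illustrative assumptions.
attributes = {
    "http.method": "GET",
    # "your-process-group-id" is a placeholder, not a real ID.
    "api.endpoint": "https://localhost:8443/nifi-api/process-groups/your-process-group-id/processors",
}


def build_request(attrs):
    """Build (but do not send) the HTTP request that the two attributes describe."""
    return Request(url=attrs["api.endpoint"], method=attrs["http.method"].upper())


request = build_request(attributes)
print(request.get_method(), request.full_url)
```

Keeping the method and URL in attributes means the same InvokeHTTP processor can serve different API calls just by changing what GenerateFlowFile emits.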

Then I created a "StandardRestrictedSSLContextService" controller service, setting the properties to point at NiFi's keystore and truststore.

The values for the passwords are actually parameters, so for Keystore Password the value is

#{keyPassword}

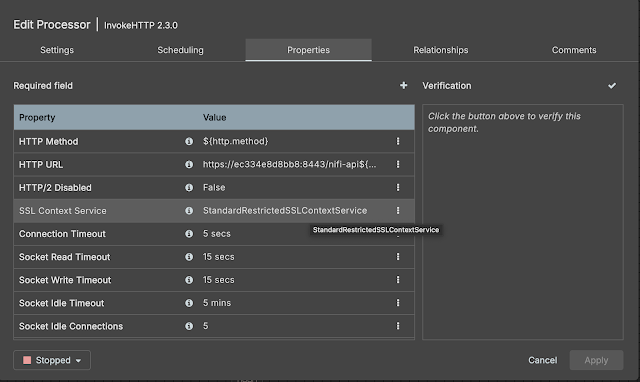

Next, I configured InvokeHTTP to use the attributes from GenerateFlowFile and the controller service I created.

Finally, I set the password parameter values using the corresponding properties from nifi.properties (these were auto-generated when I started my NiFi instance).
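If you'd rather not hunt through the file by hand, a small script can pull out the relevant entries. This is a rough sketch, assuming the standard `nifi.security.*` property names; the sample content and its values are made up for illustration, and in practice you would read the text from your own `conf/nifi.properties`.

```python
# Property names assumed to hold the auto-generated passwords.
WANTED = {
    "nifi.security.keystorePasswd",
    "nifi.security.keyPasswd",
    "nifi.security.truststorePasswd",
}


def read_security_passwords(text):
    """Return the wanted key/value pairs found in nifi.properties content."""
    found = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, comments, and anything that is not key=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        if key.strip() in WANTED:
            found[key.strip()] = value.strip()
    return found


# Made-up sample content; real values are generated by NiFi on first start.
sample = """
# security properties
nifi.security.keystore=./conf/keystore.p12
nifi.security.keystorePasswd=example-keystore-password
nifi.security.keyPasswd=example-key-password
nifi.security.truststorePasswd=example-truststore-password
"""

passwords = read_security_passwords(sample)
for name in sorted(passwords):
    print(name, "is set")
```

The script prints only the property names, not the values, so the passwords don't end up in your terminal history or logs.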

Now I'm ready to run my flow. I start the InvokeHTTP processor, then right-click GenerateFlowFile and choose Run Once. The output of my call shows up in the funnel's queue (in this case it's the list of processors in this process group, so it will include GenerateFlowFile and InvokeHTTP).
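The response is JSON, so it's easy to post-process outside NiFi too. Below is a minimal sketch that lists processor names and states. The response shape (a top-level `processors` array whose entries carry a `component` object with `name` and `state`) is my assumption about the API's output, and the sample body is hand-written, not real output from my instance.

```python
import json

# Hand-written sample; the IDs are hypothetical and the shape is an assumption.
sample_body = json.dumps({
    "processors": [
        {"id": "hypothetical-id-1",
         "component": {"name": "GenerateFlowFile", "state": "STOPPED"}},
        {"id": "hypothetical-id-2",
         "component": {"name": "InvokeHTTP", "state": "RUNNING"}},
    ]
})


def summarize_processors(body):
    """Return (name, state) pairs for each processor in the response body."""
    entity = json.loads(body)
    return [
        (p["component"]["name"], p["component"]["state"])
        for p in entity.get("processors", [])
    ]


summary = summarize_processors(sample_body)
for name, state in summary:
    print(f"{name}: {state}")
```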

In the next part I'll illustrate how to call a PUT endpoint to start a processor. Cheers!